Five Go Concurrency Patterns Every Go Developer Must Know: Fan-Out, Fan-In

Run multiple goroutines for high throughput, then collect the results.

This is part of a multi-part series on Go Concurrency Patterns:

Go Concurrency Patterns - Fan-Out, Fan-In

The Fan-Out, Fan-In pattern in Go is a concurrency model where:

Fan-Out: A single producer (goroutine) hands work to multiple worker goroutines that process tasks concurrently. In other words, fan-out means starting multiple goroutines that read from a single channel, distributing the work across workers to improve parallelism and throughput.

Fan-In: Results from multiple workers are aggregated into a single output channel for further processing. In other words, fan-in combines the outputs of multiple sources (channels) into one. The combined output can be an aggregation of results or simply a merged stream.
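The two halves of the pattern can be sketched in a few lines. This is a minimal illustration rather than the code for the scenario that follows: the helper names `fanOut`, `fanIn`, and `sumOfSquares` are made up for this example, and squaring integers stands in for real work.

```go
package main

import (
	"fmt"
	"sync"
)

// fanOut starts n workers that all read from the same input channel.
// Each worker applies work to every value it receives and sends the
// result on its own output channel.
func fanOut(in <-chan int, n int, work func(int) int) []<-chan int {
	outs := make([]<-chan int, 0, n)
	for i := 0; i < n; i++ {
		out := make(chan int)
		go func() {
			defer close(out)
			for v := range in {
				out <- work(v)
			}
		}()
		outs = append(outs, out)
	}
	return outs
}

// fanIn merges any number of channels into one and closes the merged
// channel once every input channel has been drained.
func fanIn(chans ...<-chan int) <-chan int {
	merged := make(chan int)
	var wg sync.WaitGroup
	wg.Add(len(chans))
	for _, c := range chans {
		go func(c <-chan int) {
			defer wg.Done()
			for v := range c {
				merged <- v
			}
		}(c)
	}
	go func() {
		wg.Wait()
		close(merged)
	}()
	return merged
}

// sumOfSquares wires the two halves together: one producer, several
// workers, one consumer.
func sumOfSquares(values []int, workers int) int {
	in := make(chan int)
	go func() {
		defer close(in)
		for _, v := range values {
			in <- v
		}
	}()
	square := func(v int) int { return v * v }
	total := 0
	for v := range fanIn(fanOut(in, workers, square)...) {
		total += v
	}
	return total
}

func main() {
	fmt.Println(sumOfSquares([]int{1, 2, 3, 4, 5}, 3)) // prints 55
}
```

The order in which results arrive on the merged channel is not deterministic, which is why the example aggregates with a sum instead of relying on ordering.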

Scenario:

In an e-commerce platform, consider the task of synchronizing product inventory data from multiple warehouses. Warehouses have APIs providing product stock updates. These APIs can be slow or rate-limited, and the data must be processed concurrently to minimize delays.

So, we want to:

Fetch inventory data from multiple warehouse APIs concurrently (Fan-Out).

Aggregate the stock updates into a central database (Fan-In).

Solution:

fetchInventory: The `fetchInventory` function simulates fetching inventory from an external warehouse. For now it only sleeps for a variable delay and returns three stock items with random quantities. In a real implementation, this would usually be backed by a service client or a queue client that receives inventory updates.

worker: Each worker is a fan-out goroutine that processes one warehouse, identified by warehouseID. Once it receives the inventory, it writes each update to the shared output channel `out`. The main function spins up 5 workers, so 5 workers read from their warehouses at the same time, and all of their results are written to the same output channel.

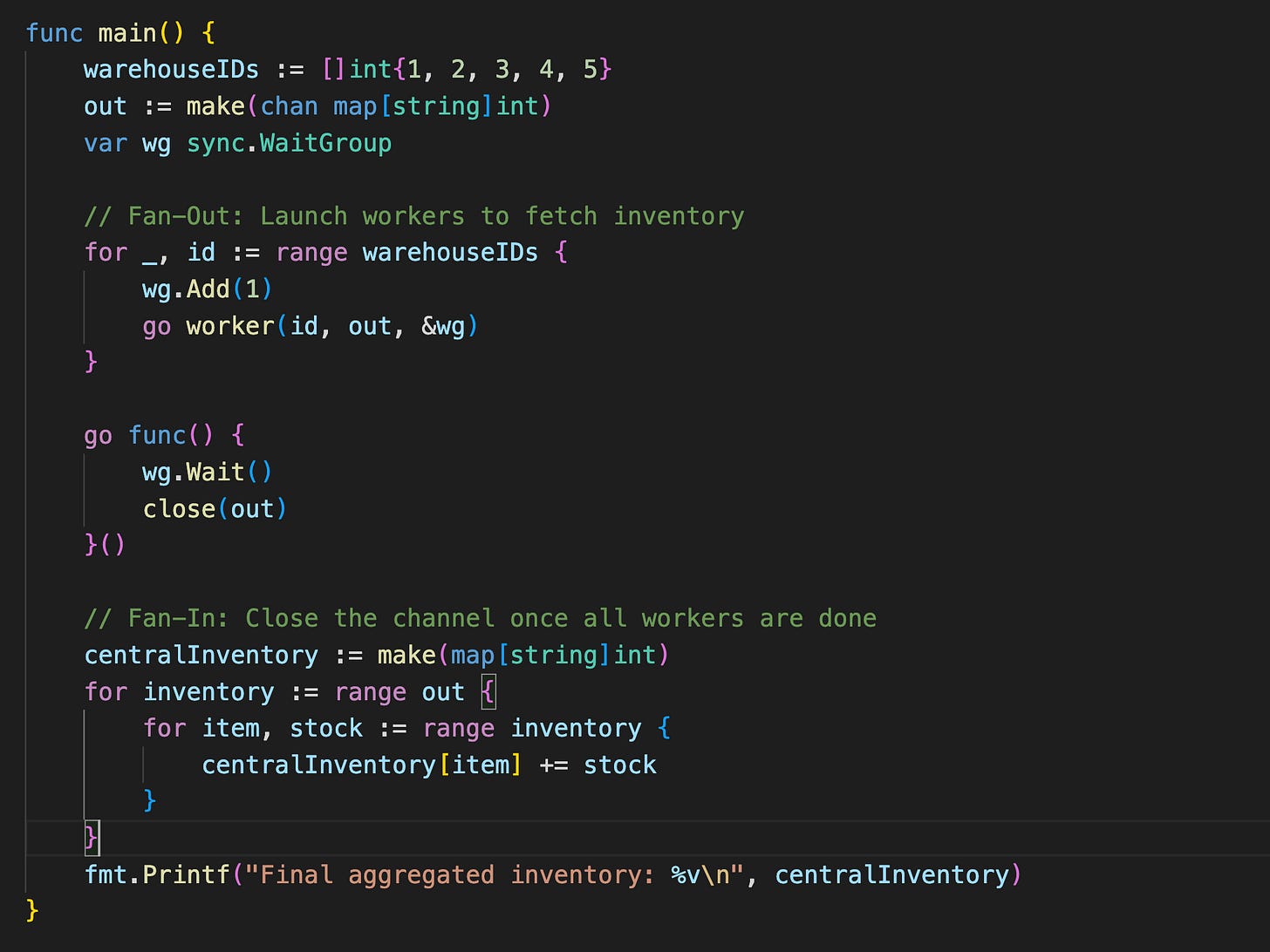

main function: We declare a slice with 5 warehouse IDs and spin up 5 separate goroutines (Fan-Out) to process all the warehouses concurrently. We set up a WaitGroup to wait for all 5 goroutines to complete, and then close the `out` channel.

At the end, we continuously read inventory from the `out` channel (Fan-In) as each worker writes to it. We collect the inventory and aggregate the quantities in a separate centralInventory map. When the WaitGroup completes in the goroutine described above, that goroutine closes the `out` channel, which terminates the for range loop over `out`. Finally, we display the aggregated inventory result.
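Putting the three pieces together, the walkthrough above can be sketched as follows. This is a reconstruction from the description, not the original listing: the `Inventory` struct fields, the product IDs `P1` to `P3`, the delay range, and the `syncInventory` helper (which holds the logic described for main so it can be called on its own) are assumptions made for illustration.

```go
package main

import (
	"fmt"
	"math/rand"
	"sync"
	"time"
)

// Inventory is one stock update reported by a warehouse (assumed shape).
type Inventory struct {
	WarehouseID int
	ProductID   string
	Quantity    int
}

// fetchInventory simulates a slow warehouse API: it sleeps for a random
// delay and returns three products with random quantities in [0, 100).
func fetchInventory(warehouseID int) []Inventory {
	time.Sleep(time.Duration(rand.Intn(50)) * time.Millisecond)
	products := []string{"P1", "P2", "P3"}
	items := make([]Inventory, 0, len(products))
	for _, p := range products {
		items = append(items, Inventory{
			WarehouseID: warehouseID,
			ProductID:   p,
			Quantity:    rand.Intn(100),
		})
	}
	return items
}

// worker fetches one warehouse and writes each update to the shared channel.
func worker(warehouseID int, out chan<- Inventory, wg *sync.WaitGroup) {
	defer wg.Done()
	for _, item := range fetchInventory(warehouseID) {
		out <- item
	}
}

// syncInventory fans out one worker per warehouse and fans the results in
// to a map of total quantity per product.
func syncInventory(warehouseIDs []int) map[string]int {
	out := make(chan Inventory)
	var wg sync.WaitGroup

	// Fan-Out: one goroutine per warehouse.
	for _, id := range warehouseIDs {
		wg.Add(1)
		go worker(id, out, &wg)
	}

	// Close out once every worker is done so the range loop below ends.
	go func() {
		wg.Wait()
		close(out)
	}()

	// Fan-In: a single consumer aggregates everything written to out.
	centralInventory := make(map[string]int)
	for item := range out {
		centralInventory[item.ProductID] += item.Quantity
	}
	return centralInventory
}

func main() {
	centralInventory := syncInventory([]int{1, 2, 3, 4, 5})
	for product, qty := range centralInventory {
		fmt.Printf("%s: %d\n", product, qty)
	}
}
```

Only the consuming goroutine touches the map, so no mutex is needed. The quantities are random, so the printed totals differ from run to run.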

Sample output:

Best Practices

Channel Buffers: Use buffered channels when producer and consumer speeds differ significantly, so that short bursts of results do not block the workers.

Worker Pool: When the number of warehouses grows, consider a worker pool to cap the number of concurrent workers and avoid resource exhaustion.
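A worker pool along these lines might look like the sketch below. Instead of one goroutine per warehouse, a fixed number of workers pull warehouse IDs from a `jobs` channel. The `fetchStock` function is a hypothetical stand-in for the warehouse API call, and `totalStock` is a name chosen for this example.

```go
package main

import (
	"fmt"
	"sync"
)

// fetchStock is a hypothetical stand-in for a warehouse API call.
func fetchStock(warehouseID int) int {
	return warehouseID * 10
}

// totalStock processes every warehouse using at most numWorkers goroutines.
func totalStock(warehouseIDs []int, numWorkers int) int {
	jobs := make(chan int)
	results := make(chan int)

	// Start a fixed number of workers that share the jobs channel.
	var wg sync.WaitGroup
	for i := 0; i < numWorkers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for id := range jobs {
				results <- fetchStock(id)
			}
		}()
	}

	// Feed the jobs, then close the channel so the workers can exit.
	go func() {
		defer close(jobs)
		for _, id := range warehouseIDs {
			jobs <- id
		}
	}()

	// Close results once every worker has finished.
	go func() {
		wg.Wait()
		close(results)
	}()

	total := 0
	for qty := range results {
		total += qty
	}
	return total
}

func main() {
	ids := make([]int, 100)
	for i := range ids {
		ids[i] = i + 1
	}
	// 100 warehouses, but never more than 4 in flight at once.
	fmt.Println(totalStock(ids, 4)) // prints 50500
}
```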

Error Handling: Enhance the worker function to handle and report errors from API calls.
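One way to report errors, sketched here under assumptions, is to send a result struct that carries either a quantity or an error, and let the fan-in side decide what to do with failures. The `Result` type, the `collect` helper, and a `fetchStock` that fails for even warehouse IDs are all invented for this example.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Result carries either a quantity or the error that prevented fetching it.
type Result struct {
	WarehouseID int
	Quantity    int
	Err         error
}

// fetchStock is a hypothetical API call that fails for even warehouse IDs.
func fetchStock(warehouseID int) (int, error) {
	if warehouseID%2 == 0 {
		return 0, errors.New("warehouse API unavailable")
	}
	return warehouseID * 10, nil
}

// worker always sends exactly one Result, wrapping any error with context.
func worker(warehouseID int, out chan<- Result, wg *sync.WaitGroup) {
	defer wg.Done()
	qty, err := fetchStock(warehouseID)
	if err != nil {
		err = fmt.Errorf("warehouse %d: %w", warehouseID, err)
	}
	out <- Result{WarehouseID: warehouseID, Quantity: qty, Err: err}
}

// collect sums the successful results and gathers the failures separately.
func collect(warehouseIDs []int) (total int, failures []error) {
	out := make(chan Result)
	var wg sync.WaitGroup
	for _, id := range warehouseIDs {
		wg.Add(1)
		go worker(id, out, &wg)
	}
	go func() {
		wg.Wait()
		close(out)
	}()
	for r := range out {
		if r.Err != nil {
			failures = append(failures, r.Err)
			continue
		}
		total += r.Quantity
	}
	return total, failures
}

func main() {
	total, failures := collect([]int{1, 2, 3, 4, 5})
	fmt.Println("total:", total)          // prints "total: 90"
	fmt.Println("failed:", len(failures)) // prints "failed: 2"
}
```

Sending errors through the same channel keeps the "one result per worker" invariant, so the consumer never has to guess whether a missing value means failure or slowness.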

Timeouts: Use `context.WithTimeout` to ensure no goroutine hangs indefinitely due to unresponsive APIs.

Rate Limiting: Implement rate limiting if warehouse APIs have request caps to prevent overloading.